WeatherBench: A benchmark dataset for data-driven weather forecasting

It seems that in 2020 no domain of science is safe from the AI hype. This includes weather and climate science, where publications and conferences on AI applications have skyrocketed in the last couple of years. It is understandable why such excitement exists: since its explosion less than ten years ago, modern AI, specifically deep learning, has achieved remarkable results on a wide range of tasks that require multi-dimensional nonlinear pattern recognition. Many physical sciences deal with similar types of problems. Forecasting weather, for example, is a complex nonlinear 3D forecasting problem. Therefore, it’s only natural to ask whether AI can be used to predict weather. To help answer this question, we compiled a benchmark dataset for data-driven weather forecasting, called WeatherBench.

How weather forecasting is done today

Weather forecasting using computers has a long history. In fact, the first general-purpose computer in the world was already used to create a weather forecast in 1950. Since then computers have become a lot more powerful, but the principles at the heart of numerical weather forecasting are still the same. The starting point is the set of equations describing air flow, cloud formation, radiation and many more physical processes in the atmosphere. Because it would take far too long to simulate every single cloud droplet on the globe, scientists have to approximate the exact equations for every process smaller than the grid scale of the model, currently around 10 km for a global weather forecast. Despite these approximations, numerical weather forecasting is a huge computational effort that keeps supercomputers around the world busy. Operational weather forecasts are really good from a global point of view. However, there are certain events, for example heavy rainfall over Africa, for which state-of-the-art models still have basically no skill.

What can AI do?

The obvious question to ask is whether AI can learn from past weather data to provide a computationally cheaper and faster alternative that might even outperform physical models in certain areas. In fact, several researchers independently had the same question in mind and performed some pioneering experiments. Peter Dueben from the European Centre for Medium-Range Weather Forecasts (ECMWF), Sebastian Scher from Stockholm University and Jonathan Weyn from the University of Washington (all co-authors of this benchmark) trained deep neural networks using assimilated observations of past weather, called reanalysis datasets, to predict weather several days ahead. Their results show that deep learning is able to predict weather to a certain degree, but not nearly as well as current weather models based on physical principles.

Such results have prompted some to proclaim that data-driven weather forecasting simply cannot work. However, I would argue that so far this has not been a fair comparison. The deep learning models in the three studies mentioned above had relatively simple architectures and were trained with only a subset of all the available historical data. Further, all three studies used different datasets and evaluation metrics, making it very difficult to compare them.

Accelerating research with a new benchmark

Benchmarks can be great accelerators for research. Most famously, MNIST and ImageNet kick-started the deep learning revolution. We hope that WeatherBench can do the same for data-driven weather forecasting. For WeatherBench we downloaded the best available data of past weather, the ERA5 reanalysis archive, and pre-processed it to make it easy to use. We regridded the data to three different horizontal resolutions, 5.625 (32 x 64 grid points or approximately 600 km), 2.8125 (64 x 128 or 300 km) and 1.40625 (128 x 256 or 150 km) degrees, each with 10 vertical levels. (If this pre-processed set of data is not to your liking, the GitHub repository contains scripts to download and process data with your own settings.) We chose the 14 meteorological variables that we considered most important for a good weather prediction, plus several constant fields like topography.
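
The grid sizes quoted above follow directly from the resolution in degrees: a global grid spans 180 degrees of latitude and 360 degrees of longitude. Here is a rough sanity check in Python. It is only a sketch and not part of the WeatherBench code: the function names are made up for illustration, and the mean Earth radius of 6371 km is an assumed value.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius for this estimate


def grid_shape(resolution_deg):
    """Number of (latitude, longitude) points on a regular global grid."""
    n_lat = round(180 / resolution_deg)
    n_lon = round(360 / resolution_deg)
    return n_lat, n_lon


def spacing_km_at_equator(resolution_deg):
    """Approximate distance between neighbouring grid points at the equator."""
    return math.radians(resolution_deg) * EARTH_RADIUS_KM


for res in (5.625, 2.8125, 1.40625):
    n_lat, n_lon = grid_shape(res)
    km = spacing_km_at_equator(res)
    print(f"{res} deg -> {n_lat} x {n_lon} grid points, about {km:.0f} km")
```

At the equator this gives spacings a little above the rounded 600, 300 and 150 km figures. Towards the poles the east-west spacing shrinks with the cosine of latitude, so a single number in kilometres is only ever a rough guide.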

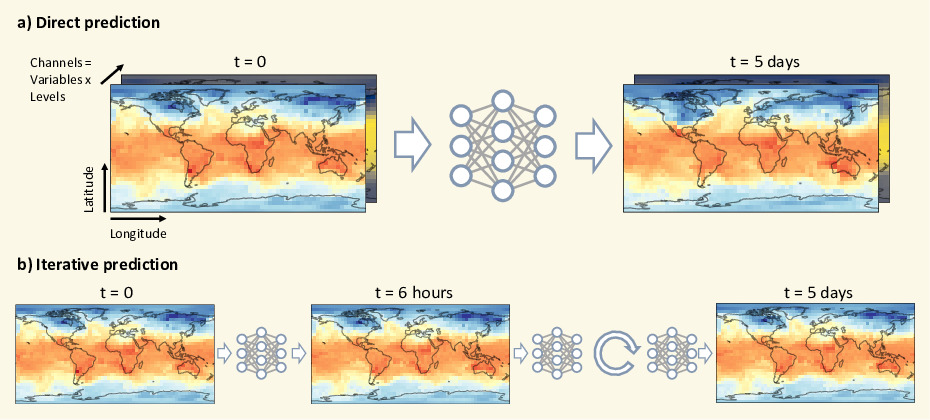

Next, we agreed on a common problem setup and a simple evaluation metric. The task is to forecast the geopotential field at 500 hPa pressure (basically the pressure distribution at 5 km height above sea level) and the temperature field at 850 hPa pressure (around 1.5 km height) 3 and 5 days ahead. This is a forecast time where interesting nonlinear dynamics occur but the atmosphere is still reasonably predictable in a deterministic sense. Further, this forecast range is especially important because it allows adequate preparation for extreme weather. The forecast skill is measured using the root mean squared error (RMSE).
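
To make the metric concrete, here is a minimal RMSE sketch in plain Python. The optional latitude weighting is an addition of this sketch: on a latitude-longitude grid, cells near the poles cover less area, so weighting each row by the cosine of its latitude is a common choice. The full paper is the reference for the exact definition used in the benchmark. The function name, the toy fields and the latitudes are all made up for illustration.

```python
import math


def rmse(forecast, truth, lats_deg=None):
    """RMSE over a 2D field given as a list of rows (one row per latitude).

    If lats_deg is given, each row is weighted by cos(latitude), normalised
    so that the weights average to 1 (area weighting; an assumption here).
    """
    n_lat = len(truth)
    if lats_deg is None:
        weights = [1.0] * n_lat
    else:
        cos_lat = [math.cos(math.radians(lat)) for lat in lats_deg]
        mean_cos = sum(cos_lat) / n_lat
        weights = [c / mean_cos for c in cos_lat]

    total, count = 0.0, 0
    for w, f_row, t_row in zip(weights, forecast, truth):
        for f, t in zip(f_row, t_row):
            total += w * (f - t) ** 2
            count += 1
    return math.sqrt(total / count)


# Toy 3 x 4 "temperature" fields in kelvin (made-up numbers).
truth = [[270.0] * 4, [285.0] * 4, [271.0] * 4]
forecast = [[274.0] * 4, [285.0] * 4, [271.0] * 4]  # 4 K error in the first row only
lats = [60.0, 0.0, -60.0]  # first row is the northernmost one

print(rmse(forecast, truth))        # every grid point counts equally
print(rmse(forecast, truth, lats))  # the high-latitude error counts less
```

Because the only error sits in the 60 degree row, the weighted score comes out lower than the unweighted one. An error of the same size at the equator would have the opposite effect.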

Meaningful baselines

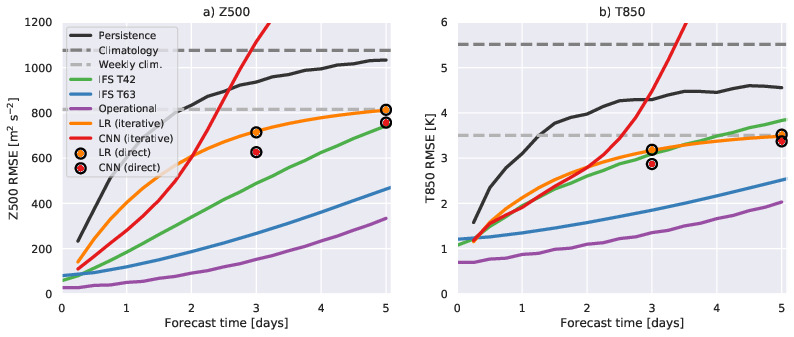

For a benchmark to be meaningful we need to have some reference forecasts to compare to. For WeatherBench we computed three types of baselines: first, simple climatology and persistence forecasts; second, simple machine learning models (linear regression as well as a fully convolutional network); and third, weather forecasting models based on physical principles. For the third type we chose the current gold standard of weather forecasting, the Integrated Forecasting System (IFS) run by the ECMWF. However, as mentioned above, this system uses massive computer resources. For a more useful comparison we also ran the IFS model at lower resolutions, which should provide realistic targets for data-driven forecasts. To see how each of the baselines performs, check out the figure below.

Error versus forecast time for geopotential (basically pressure) on the left and temperature on the right. Operational and IFS are physical models. LR and CNN are data-driven baselines.
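
The two simplest baselines mentioned above are easy to sketch. A persistence forecast assumes the weather at the forecast time is the same as at the start time; a climatology forecast always predicts a long-term average. The example below uses a synthetic time series at a single point and a single overall mean as the climatology. Both are simplifications chosen for this sketch, not the setup of the benchmark, and all names and numbers are made up.

```python
import math


def rmse(pred, obs):
    """Root mean squared error between two equally long sequences."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs))


def persistence_forecast(series, lead):
    """The forecast for time t + lead is the value observed at time t."""
    return series[:-lead]


def climatology_forecast(train, n):
    """The forecast is always the mean of the training period."""
    mean = sum(train) / len(train)
    return [mean] * n


# Synthetic daily "temperature": a 10-day oscillation around 280 K (made-up data).
series = [280.0 + 5.0 * math.sin(2 * math.pi * day / 10) for day in range(400)]
train, test = series[:300], series[300:]

for lead in (1, 3, 5):
    targets = test[lead:]  # the values each forecast is verified against
    pers = rmse(persistence_forecast(test, lead), targets)
    clim = rmse(climatology_forecast(train, len(targets)), targets)
    print(f"lead {lead} d: persistence RMSE {pers:.2f} K, climatology RMSE {clim:.2f} K")
```

On this toy series persistence beats climatology at a lead time of one day and loses at three and five days. That is why the pair makes a useful floor: any serious model should beat both at the lead times of interest.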

The goal

The primary goal of WeatherBench is to see how good a data-driven forecast can be when the latest advances in AI and the best training dataset are combined. Can a neural network get close to the skill of current operational weather models? Only time will tell, but it certainly is a hard task. For me personally, though, the promise of data-driven forecasting is not in predicting pressure or temperature somewhere in the middle of the atmosphere anyway. Rather, as mentioned above, there are certain very impactful phenomena, such as large thunderstorms, that physical models still struggle with. Hopefully, AI can improve prediction in these areas by learning directly from observations. WeatherBench is the first step towards this long-term goal. Obtaining good forecasts for the variables we picked requires machine learning models that have a solid grasp of the dynamics of the atmosphere. A model that has developed such an understanding should provide a great starting point for more complex applications. We still have a lot to learn about the design of data-driven models, and I hope that WeatherBench can provide a platform to quickly test and compare different approaches.

If this sounds interesting to you, give it a try: we made the dataset as easy to use as possible. You can find instructions on how to download the data and a quickstart notebook (launch an interactive demo here via Binder) on the GitHub page. For more details check out the full paper.
